I think that one day, this blog will have the ability to generate random bits of goodies...

1. Hacking your sleep

2. Ok... it's called REPL: Read-eval-print loop

3. "Kick Ass" JS Game

4. D0z.me DDoS fun

5. Essays by Paul Graham

6. Dropbox's application to Y Combinator

7. Jeff Dean's Keynote: Designs, Lessons and Advice from Building Large Distributed Systems

8. Superstition in the pigeon

9. Understanding Node.js (tutorial)

10. JSLint, and lint in general

Thursday, December 23, 2010

Saturday, December 04, 2010

The Full-Stack Programmer

Old idea formalized in a catchy new term: read more about The Full-Stack Programmer.

It will be interesting if this idea gains traction.

Wednesday, October 27, 2010

An algorithm for finding cut vertices

Related Readings: Biconnected Component

Note: kind of similar to finding strongly connected components

Special thanks to JJ & Brent for this!
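For reference, here is a minimal Python sketch of one standard way to find cut vertices: a single depth-first search that tracks discovery times and "low" values. This is not necessarily the exact algorithm the post had in mind. The function name cut_vertices and the adjacency-dict input format are my own assumptions, and the sketch assumes a simple undirected graph (no parallel edges) that is small enough for Python's recursion limit.

```python
def cut_vertices(graph):
    """Return the set of cut vertices of a simple undirected graph.

    graph: dict mapping each vertex to an iterable of its neighbours.
    """
    disc = {}       # discovery time of each visited vertex
    low = {}        # earliest discovery time reachable from the vertex's DFS subtree
    result = set()
    timer = 0

    def dfs(u, parent):
        nonlocal timer
        disc[u] = low[u] = timer
        timer += 1
        children = 0
        for v in graph[u]:
            if v == parent:
                continue
            if v in disc:
                # Back edge: u can reach an earlier vertex directly.
                low[u] = min(low[u], disc[v])
            else:
                children += 1
                dfs(v, u)
                low[u] = min(low[u], low[v])
                # v's subtree cannot climb above u, so removing u cuts it off.
                if parent is not None and low[v] >= disc[u]:
                    result.add(u)
        # The DFS root is a cut vertex only if it has two or more DFS children.
        if parent is None and children > 1:
            result.add(u)

    for vertex in graph:
        if vertex not in disc:
            dfs(vertex, None)
    return result
```

The "low" bookkeeping is the same trick used by the classic strongly connected components algorithms, which is where the similarity comes from.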

Maximum subarray problem

This is one of those nice little problems that I used to know how to solve, but I have forgotten over time...

Problem Description: Given an array of n integers (which may be negative), find the subarray with the maximum sum in O(n) time.

Solution (Intuition): Observe that the smallest possible maximum sum is 0 (the solution subarray can be empty), so we never want a running sum to drop below 0. A positive number increases the sum (so we take it in), while a negative number decreases it. Hence, a non-empty solution subarray must start with a positive number. We keep two variables: max_ending_here, the best sum of a subarray ending at the current position (reset to 0 whenever it goes negative), and max_so_far, the best sum seen anywhere so far.

Solution (Code):
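A minimal Python sketch of the intuition above (this approach is commonly known as Kadane's algorithm). The function name max_subarray and the (sum, start, end) return value are my own choices for illustration. As in the intuition, the empty subarray with sum 0 counts as a valid answer.

```python
def max_subarray(nums):
    """Return (best_sum, start, end) so that nums[start:end] has the maximum sum.

    The empty subarray is allowed, so best_sum is never below 0.
    """
    max_so_far = 0        # best sum seen anywhere so far
    max_ending_here = 0   # best sum of a subarray ending at the current index
    best_start = best_end = 0
    start = 0             # where the current candidate subarray begins

    for i, x in enumerate(nums):
        max_ending_here += x
        if max_ending_here < 0:
            # A negative running sum can only hurt; restart after index i.
            max_ending_here = 0
            start = i + 1
        elif max_ending_here > max_so_far:
            max_so_far = max_ending_here
            best_start, best_end = start, i + 1

    return max_so_far, best_start, best_end
```

Each element is visited once, so the running time is O(n) with O(1) extra space.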

Friday, October 15, 2010

Another Ubuntu Upgrade

Just upgraded to Ubuntu 10.10, but not without a few glitches.

1. I did the usual alternate cd upgrade

2. Upgrade froze with about 20% left. Restarted computer but could not boot into X.

3. Decided to use recovery option in grub boot menu

4. Manually mounted the alternate CD ISO and ran sudo ./cdromupgrade from the terminal. This miraculously solved all problems. It appears to "resume" the upgrade process.

Conclusion: running ./cdromupgrade from the command line seems like a good idea for future upgrades. It's definitely faster too. It might also be a good idea to defer the Internet update until the upgrade is finished.

Sunday, September 26, 2010

Ext 4 on Windows

Sunday, September 05, 2010

Sunday, August 29, 2010

Grub 2

I just realized that the newer Ubuntu versions use Grub 2 instead of the older Grub. And in Grub 2, there are some significant changes (see here):

- Scripting support including conditional statements and functions

- Dynamic module loading

- Rescue mode

- Custom Menus

- Themes

- Graphical boot menu support and improved splash capability

- Boot LiveCD ISO images directly from hard drive

- New configuration file structure

- Non-x86 platform support (such as PowerPC)

- Universal support for UUIDs (not just Ubuntu)

Note to self:

1. /boot/grub/menu.lst no longer exists; it has been replaced by /boot/grub/grub.cfg and /etc/default/grub.

2. To edit the display settings, edit /etc/default/grub, and then run sudo update-grub, which will generate /boot/grub/grub.cfg

Monday, August 23, 2010

Unit Testing

Some slides on why unit testing is soooo important! (only opens correctly in Firefox)

There are also some slides on mock objects.

Also observe the brilliant use of XUL+JS+CSS to create a browser based presentation engine.

Saturday, August 21, 2010

War Story

Once in a while, we run into those pesky, irritating hard disk issues: no disk space left on one partition, but a few fat GBs free on another.

Fortunately, there exists a cheap, simple, and neat trick to resolve such an issue on Linux: move the folder to the roomier partition and leave a symbolic link in its place. The approach is as follows:

1. Perform the operations using root permissions.

$ su root

2. Do a recursive copy of the folder you want to move.

Note that we use the -r flag for recursive copy, and the --preserve flag to preserve important file attributes such as ownership and timestamps.

$ cp -r --preserve=all SOURCE TARGET

3. Delete the source folder

4. Create a symbolic link in place of the source folder.

$ ln -s TARGET SOURCE
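The same four steps can be sketched in Python. This is only an illustration under a few assumptions: the function name relocate_directory is made up, and shutil.copytree keeps timestamps and permission bits but, unlike cp --preserve=all, does not preserve ownership. For system folders the shell commands are the safer choice.

```python
import os
import shutil


def relocate_directory(source, target):
    """Move `source` to `target` and leave a symlink behind at `source`."""
    source = os.path.abspath(source)
    target = os.path.abspath(target)

    # Step 2: recursive copy. symlinks=True copies links as links.
    # copytree raises on failure, so the source is only deleted after
    # a copy that completed without errors.
    shutil.copytree(source, target, symlinks=True)

    # Step 3: delete the source folder.
    shutil.rmtree(source)

    # Step 4: create a symbolic link in place of the source folder.
    os.symlink(target, source)
```

Run it with enough privileges to read the source and write to both locations (step 1).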

Friday, August 13, 2010

Monday, August 09, 2010

C10k problem

The C10k problem is the name given to a limitation that most web servers currently have which limits the web server's capabilities to only handle about ten thousand simultaneous connections. This limitation is partly due to operating system constraints and software limitations.

Source: Wikipedia

Sunday, August 08, 2010

Design Patterns

High-level ideas:

- Lazy initialization

- Singleton/Multiton

- Proxy/Facade

- Decorator

- Factory

- Observer

- Publisher/Subscriber
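To make a couple of these concrete, here is a small Python sketch of lazy initialization combined with a singleton, plus a bare-bones observer. The class names Config and Subject are hypothetical and chosen only for illustration.

```python
class Config:
    """Lazy initialization + singleton: the instance is built on first use."""

    _instance = None

    @classmethod
    def instance(cls):
        if cls._instance is None:
            # Nothing is created until someone actually asks for it.
            cls._instance = cls()
        return cls._instance


class Subject:
    """Observer: registered callbacks are notified when an event happens."""

    def __init__(self):
        self._observers = []

    def subscribe(self, callback):
        self._observers.append(callback)

    def notify(self, event):
        for callback in self._observers:
            callback(event)
```

Publisher/subscriber is the same idea with a broker in the middle, so that publishers and subscribers never reference each other directly.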

Friday, August 06, 2010

HipHop for PHP

HipHop for PHP transforms PHP source code into highly optimized C++. It was developed by Facebook and was released as open source in early 2010.

See this Facebook post and GitHub (source)

Wednesday, August 04, 2010

Gearman

Gearman provides a generic application framework to farm out work to other machines or processes that are better suited to do the work. It allows you to do work in parallel, to load balance processing, and to call functions between languages.

See Gearman.org

Monday, August 02, 2010

stdClass in PHP

stdClass is instead just a generic 'empty' class that's used when casting other types to objects. I don't believe there's a concept of a base object in PHP

For the record... courtesy of stackoverflow.com

Further reading: here and here.

Sunday, August 01, 2010

COW in PHP

Copy-on-write (sometimes referred to as "COW") is an optimization strategy used in computer programming. The fundamental idea is that if multiple callers ask for resources which are initially indistinguishable, they can all be given pointers to the same resource. This function can be maintained until a caller tries to modify its "copy" of the resource, at which point a true private copy is created to prevent the changes becoming visible to everyone else. All of this happens transparently to the callers. The primary advantage is that if a caller never makes any modifications, no private copy need ever be created.

Recently, I was dealing with large data sets in PHP and I was wondering how PHP's garbage collection works. I discovered that PHP actually uses copy-on-write (COW)!
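To make the copy-on-write idea concrete, here is a toy sketch in Python (not PHP, and not how the PHP engine is actually implemented). The class CowList and its methods are hypothetical names for illustration. A "copy" shares the underlying list until one side writes to it.

```python
class CowList:
    """A list wrapper that shares its storage until someone writes to it."""

    def __init__(self, items):
        self._items = list(items)
        self._shared = False  # True while the storage may be shared with a copy

    def copy(self):
        """Return a cheap 'copy' that points at the same underlying list."""
        clone = CowList.__new__(CowList)
        clone._items = self._items
        clone._shared = True
        self._shared = True
        return clone

    def __getitem__(self, index):
        return self._items[index]  # reads never trigger a copy

    def __setitem__(self, index, value):
        if self._shared:
            # First write: make a private copy before modifying.
            # (This toy version has no reference counts, so it may copy
            # once more than strictly necessary.)
            self._items = list(self._items)
            self._shared = False
        self._items[index] = value

    def shares_storage_with(self, other):
        return self._items is other._items
```

Usage: after b = a.copy(), both objects share storage; the first b[0] = ... gives b its own list and leaves a untouched.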

Consider the following script, which demonstrates how PHP's GC works.

Note that before PHP 5.3.0, circular references resulted in memory leaks, because plain reference counting cannot reclaim cycles.

Vim Niceties

Some features that newbies may not know about:

1. Some default settings that I have found useful

In your .vimrc file:

set tabstop=4

set number

set nocompatible

set smartindent

set shiftwidth=4

set mouse=a

Basically, these turn on line numbers and smart indentation, set the tab stop and indent width to 4, and enable mouse support in all modes.

2. Omnicompletion

In your .vimrc file, add:

filetype plugin on

set ofu=syntaxcomplete#Complete

Hit Ctrl-X Ctrl-O in insert mode to see the omni completion menu pop up when editing code (Ctrl-P gives plain keyword completion).

3. Auto highlight red whenever you exceed 80 chars in one line

highlight OverLength ctermbg=red ctermfg=white guibg=#592929

match OverLength /\%81v.\+/

Saturday, July 31, 2010

Mozilla

Some stuff about Mozilla (Mozilla as in Mozilla, not only Firefox):

It is highly standards compliant, supporting many standards from bodies such as the W3C, IETF, and ECMA.

Mozilla is built on a very big source code base and is far larger than most Open Source projects. It is 30 times larger than the Apache Web Server, 20 times larger than the Java 1.0 JDK/JRE sources, 5 times bigger than the standard Perl distribution, twice as big as the Linux kernel source, and nearly as large as the whole GNOME 2.0 desktop source—even when 150 standard GNOME applications are included.

As a result of the browser wars in the late 1990s, the platform has been heavily tested and is highly optimized and very portable. Not only have hundreds of Netscape employees and thousands of volunteers worked on it, but millions of end users who have adopted Mozilla-based products have scrutinized it as well.

-- Rapid Application Development with Mozilla, Nigel McFarlane (2003)

Also, a thing or two about Firefox add-ons development:

Useful tools:

Extension Developer

DOM inspector

XPCOM Viewer

Useful Websites:

Mozilla Developer Network (MDN)

Mozilla Cross-Reference

Wednesday, July 28, 2010

Friday, July 16, 2010

Power Sockets as Internet Ports

A friend pointed out this interesting product to me: 200 Mbps Powerline Ethernet Adapter.

Here's how it works:

See also: Power line communication

Tuesday, July 13, 2010

Anatomy of LVM

Note to self:

PV: physical volume, VG: volume group, LV: logical volume

        hda1   hdc1          (PV:s on partitions or whole disks)
           \   /
            \ /
          diskvg             (VG)
          /  |  \
         /   |   \
     usrlv rootlv varlv      (LV:s)
       |      |     |
     ext2 reiserfs xfs       (filesystems)

Monday, July 12, 2010

Qualitative Research & Grounded Theory

This is a summary of 5 chapters from the book Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory (2nd edition), by Anselm Strauss and Juliet Corbin.

Chapter 09: Axial Coding

This chapter describes the process of Axial Coding. Axial Coding is defined as "the process of relating categories to their subcategories". The objective here is to "form precise and complete explanations about phenomena" by linking the categories and subcategories. The chapter also introduces the traditional concept of "paradigm" (think Kuhn). The term "actions/interactions" is also introduced, seemingly as a replacement for the concept of "cause/effect", which can be contentious in scientific and philosophical literature (think Hume -- induction/deduction). The basis for this is the standard argument that an over-analytical use of "cause/effect" does not faithfully represent the many phenomena that go on in the natural sciences. Finally, the authors propose the use of mini-frameworks and recording techniques to facilitate the coding process. Such frameworks can simply be diagrams (think Microsoft Visio).

Chapter 10: Selective Coding

Selective Coding is the "process of integrating and refining the theory". The book lays down the criteria for choosing a central category. The chapter again reinforces the use of diagramming to help with the analysis and with representing the data abstractly. The practice of using memos is also introduced as a useful tool that guides the thinking process. Lastly, the process of refining the theory is explained. The techniques discussed for refining include filling in poorly developed categories, dropping ideas that do not fit (trimming the theory), validating the schema, identifying potential outliers, and appreciating the complexity and variety of data (building in variation).

Chapter 11: Coding for Process

In this chapter, "process" is defined as "sequences of evolving action/interaction... [that] can be traced to changes in structural conditions." An easy way to think about this is reducing process to phases or stages. Processes involve not only the flow and the problems/issues, but also the conditions and the forms that the problems take. A process can also be broken down into subprocesses, which can be further broken down into action/interactional tactics. We can categorize the steps in a process, and look at the process from a micro or macro viewpoint.

Chapter 12: The Conditional/Consequential Matrix

This chapter introduces the conditional/consequential matrix as "an analytical device to stimulate analysts' thinking about the relationships between macro and micro conditions / consequences both to each other and to process." In justifying the matrix, the authors make several important assertions: (1) conditions and consequences are not independent, (2) micro and macro conditions are often intertwined, (3) conditions and consequences exist in clusters, and (4) action/interaction is not limited to individuals, but can be caused by global bodies/organizations as well. The matrix is drawn as an Archimedean spiral, where dark lines denote interaction, the spaces between the lines are the sources of conditions/consequences, and the arrows denote the intersection of structure with process.

Chapter 13: Theoretical Sampling

Theoretical sampling here is defined as the data gathering process that aims to create more categories. Sampling can be applied to open coding, axial coding and selective coding.

Open Sampling (for open coding): taking an open (open as in open to possibilities) approach to data as it comes by.

Relational and Variational Sampling (for axial coding): sampling for events that show relations and variety among concepts.

Discriminate Sampling (for selective coding): here the researcher selectively chooses data that will contribute most towards comparative analysis.

Lastly, the authors assert that sampling should be continued until "each category is saturated".

Sunday, July 11, 2010

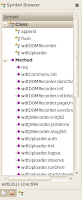

Gedit Symbol Browser Plugin

The Gedit Symbol Browser Plugin is a great plugin to get around large chunks of code quickly.

As the binary distribution for Ubuntu Linux hosted on sourceforge.net seems to be outdated/incompatible, I have downloaded the sources for gedit and the symbol browser and compiled them.

I am making the binary for the plugin available here.

Details: Compiled with Gedit 2.30.3 and gedit-symbol-browser-0.1 on Ubuntu Lucid 10.04 i386

Installation:

$ cp gedit-symbol-browser-plugin*.tar.gz ~/.gnome2/gedit/

$ cd ~/.gnome2/gedit/

$ tar -xzf gedit-symbol-browser-plugin*.tar.gz

$ rm gedit-symbol-browser-plugin*.tar.gz

Note: you need to have exuberant-ctags installed.

$ sudo apt-get install exuberant-ctags

Saturday, July 10, 2010

Thursday, July 08, 2010

Java Code Coverage for Eclipse

Java coders doing JUnit testing should install this.

http://www.eclemma.org/

A more advanced code coverage tool is Clover, which you can try with a fully functional 30-day trial.

Sunday, July 04, 2010

Creating a Launcher in Ubuntu

Here is how to create a launcher in Ubuntu, using the old school approach.

Say we are calling the launcher "Awesome". On your desktop, create a new file "Awesome.desktop". Put this in the file (ascii):

#!/usr/bin/env xdg-open

[Desktop Entry]

Encoding=UTF-8

Version=1.0

Type=Link

Icon[en_US]=gnome-panel-launcher

URL=file:///home/hbovik/awesome_stuff/

Name[en_US]=Awesome

Name=Awesome

Icon=gnome-panel-launcher

Finally, chmod +x Awesome.desktop
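If you create launchers often, the same steps can be scripted. Here is a minimal Python sketch, where the function name create_link_launcher and its parameters are hypothetical. It writes a trimmed-down version of the file above (the localized keys and the Encoding line are left out) and then does the equivalent of chmod +x.

```python
import os
import stat


def create_link_launcher(directory, name, url, icon="gnome-panel-launcher"):
    """Write <name>.desktop into `directory` and mark it executable."""
    path = os.path.join(directory, name + ".desktop")
    lines = [
        "#!/usr/bin/env xdg-open",
        "[Desktop Entry]",
        "Version=1.0",
        "Type=Link",
        "Name=" + name,
        "Icon=" + icon,
        "URL=" + url,
    ]
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")

    # Equivalent of `chmod +x`: add the execute bits to the current mode.
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return path
```

For the example above, that would be create_link_launcher(os.path.expanduser("~/Desktop"), "Awesome", "file:///home/hbovik/awesome_stuff/"), assuming your desktop folder is ~/Desktop.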

Friday, July 02, 2010

Zarro Boogs

By design, Bugzilla is programmed to return the string "zarro boogs found" instead of "0 bugs found" when a search for bugs returns no results. "Zarro Boogs" is a facetious meta-statement about the nature of software debugging. Bug tracking systems like Bugzilla readily describe how many known bugs are outstanding. The response "zarro boogs", is intended as a buggy statement itself (a misspelling of "zero bugs"), implying that even when no bugs have been identified, software is still likely to contain bugs that haven't yet been identified.

Saturday, June 26, 2010

Apache Directives

Note to self (.htaccess):

To set 403 Forbidden access

Order Deny,Allow

Deny from all

To enable CGI scripts

Options +ExecCGI

AddHandler cgi-script cgi pl

And remember to chmod 777

if you want mkdir permissions

To add type

AddType application/xhtml+xml .xhtml

File specific

<files somefile.txt>

# your stuff here

</files>

Directory specific (server or virtual host config only; <Directory> is not allowed in .htaccess)

<Directory /some/path/>

# your stuff here

</Directory>

List Directory

Options +Indexes

PHP specific

php_flag display_errors on

php_value memory_limit 12M

Saturday, June 12, 2010

Sunday, May 30, 2010

Federation

I recently attended a talk on Hadoop by Robert Chansler. One interesting concept I picked up was the concept of "federation" or simply "playing nice with users".

In the context of Hadoop, this means achieving backward compatibility with legacy versions of the software. E.g. when users run "old code" in clusters with a newer Hadoop version, their code can run correctly without modifications.

Titbit about Hadoop: the next Hadoop milestone will focus on security.

Friday, May 21, 2010

Scientific Paradigms

Foreword: This post is a follow-up to a previous post on the Fourth Paradigm.

The Four Paradigms and some examples:

1st Paradigm: Experimental

Conducting simple experiments to confirm a hypothesis (e.g. Ben Franklin and the kite experiment, Young's double-slit experiment, chemical reactions, etc.)

2nd Paradigm: Theoretical

Theories of the natural sciences, theoretical computer science, etc.

3rd Paradigm: Computational Science

Engineering based fields, Solving games, Four color theorem, computer simulations, etc

4th Paradigm: Data-Intensive Research

Data mining, the semantic web, machine learning, distributed systems, the LHC, etc.

Question: what makes the 4th paradigm compelling? How does it stack up against the previous paradigms (e.g. efficiency, feasibility, etc.)?

For download: The Fourth Paradigm: Data-Intensive Scientific Discovery (the book)

Thursday, May 20, 2010

Bell's Law of Computer Classes

Bell's Law of Computer Classes, formulated by Gordon Bell in 1972, describes how computer classes form, evolve and may eventually die out. New classes create new applications, resulting in new markets and new industries.

Friday, May 14, 2010

@Override in Java

@Override: Indicates that a method declaration is intended to override a method declaration in a superclass. If a method is annotated with this annotation type but does not override a superclass method, compilers are required to generate an error message.

Tip of the day: The @Override annotation is a useful feature to ensure that you are overriding the correct method. Mostly used in places such as:

@Override

public int hashCode()

@Override

public boolean equals(Object obj)

Ubuntu Lucid Lynx

Just upgraded to Ubuntu Lucid Lynx... but not without a few cheap tricks along the upgrading process.

1. Upgrade Using the Alternate CD

I upgraded using the alternate CD, then did a partial upgrade via the Internet. To upgrade using the alternate CD, I downloaded the alternate CD image and mounted it:

$ sudo mount -o loop ~/path/to/alternate/cd/ubuntu-10.04-alternate-i386.iso /media/cdrom0

Then hit Alt-F2, and ran gksu "sh /media/cdrom0/cdromupgrade"

This is probably the fastest upgrading method.

2. Not enough disk space... oops!

Ironically, I did not have enough disk space on my ext4 partition to do the partial upgrade via the Internet. After some Googling, I found a really neat trick:

$ sudo su

$ cd /var/cache/apt

$ mv archives archives-orig

$ ln -s /media/AnotherPartition archives

$ mkdir archives/partial

Run the update manager, and complete the upgrade process. After installing:

$ rm archives

$ mv archives-orig archives

3. Getting Nvidia drivers to work again

I had some difficulty getting the Nvidia 195.36.24 driver to work again as the installation script failed. I followed the instructions on ubuntugeek.com to solve the problem.

Specifically, in /etc/modprobe.d/blacklist.conf,

Adding the following lines helped:

blacklist vga16fb

blacklist nouveau

blacklist rivafb

blacklist nvidiafb

blacklist rivatv

4. Getting the window buttons back in the _right_ place

Although I had no problems adjusting to the left positioning of the minimize, maximize and close buttons, I found the change unappealing.

This guide clearly helped. One fact I wasn't aware of was that running "gconf-editor" is different from running "sudo gconf-editor" (the latter edits root's settings rather than your own).

5. Thunderbird 3

A fan of Thunderbird 2, I was disappointed at how Thunderbird 3 handled the IMAP messages. TD 3 attempted to download every single email message from the IMAP server (which quickly made me run out of disk space). Turning off synchronization did not help.

Fortunately, the following trick was useful:

In the config editor in TD 3 (Preferences->Advanced->General->Config Editor), set the following values to false

mail.server.default.autosync_offline_stores

mail.server.default.offline_download

6. Sounds and ALSA

I found that my sound device did not work after the upgrade (i.e. no sound). After realizing that alsaconf had been removed from the latest alsa package, I figured that a simple remove and reinstall would do the trick:

$ sudo apt-get remove alsa-base

$ sudo apt-get install alsa-base

Conclusion

Lucid Lynx comes with major under-the-hood improvements (faster boot-up, better system integration, etc.) and is definitely worth the upgrade.

Monday, April 12, 2010

Sunday, April 04, 2010

Emacs

On Fri, April 2, 2010 2:44 pm, Dan Kilgallin wrote:

I'd actually say that in general, forcing someone to use a particular

editor is pretty rude. However, most rational people I know who do

substantial amounts of programming agree that it is a very well-designed

editor. I would say that, as is the case with LaTeX, it is almost

certainly worth the learning curve. Personally, I prefer gedit on unix,

but I respect vim. I don't know anyone experienced who prefers emacs.

On Fri, April 2, 2010 1:29 pm, Ernesto Alfonso wrote:

> I'd say whoever forces anyone to use vim should be imprisoned as it is a

> form of torture.

>

> On Fri, April 2, 2010 11:27 am, Dan Kilgallin wrote:

>

>> No, I would not agree with that. For example, even in a war, nobody

>> should be forced to program using emacs.

>>

>> On Thu, April 1, 2010 11:34 pm, Ernesto Alfonso wrote:

>>

>>

>>> Although this isnt related to 251, we'd like the input of its TA's

>>> here. Would you agree that, in a war, anything goes?

Sunday, March 14, 2010

Thursday, March 11, 2010

Wednesday, February 03, 2010

Peter Norton

Peter Norton. In the 1980s, Norton produced a popular tool to retrieve erased data from DOS disks, which was followed by several other tools which were collectively known as the Norton Utilities.
